This post may get a bit heavier than the previous one. I didn’t expect Part 1 to attract so much interest.

If you missed Part 1, you can find it here.

There’s also an introduction to Terraform here.

MultiCloud networking is fascinating, and there is so much to learn. When I was studying for my CCIE more than 15 years ago, there was only one way to learn: by doing. Experience is important, but there’s no way to get exposed to every nuance without testing, failing and testing again. That natural curiosity is what separates good engineers from great engineers.

For me there are three objectives with these posts:

- Get a deeper understanding of Terraform, the de facto IaC solution

- Get a deeper exposure to Aviatrix, a technology I really like

- Get a deeper understanding of CSP networking constructs

I hope you enjoy this post. Don’t forget to comment or like.

1. What are we going to build?

In the last post, we deployed a simple hub and spoke infrastructure in AWS.

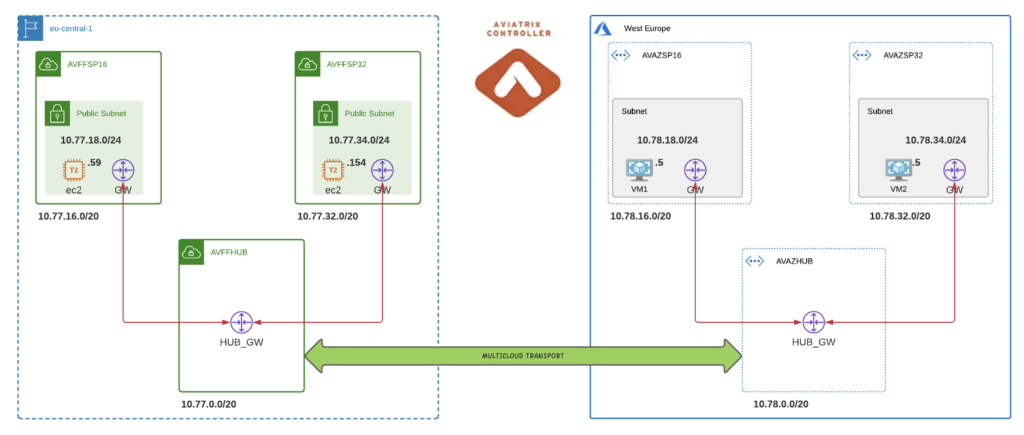

This time we are going to create an identical infrastructure in Azure and use the Aviatrix MultiCloud Transit to seamlessly interconnect the two clouds.

The significance of this should not be overlooked. We are using a common overlay deployed across two clouds (AWS and Azure), with all routing constructs built automatically using a common Terraform provider. The entire infrastructure is built in 20 minutes.
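The "common Terraform provider" is the Aviatrix provider, which drives the Aviatrix Controller; the cloud accounts referenced in the code (`Eskimoo` and `EskimooAzure`) are access accounts onboarded in that controller. The provider configuration isn't shown in this post, so here is a minimal sketch, assuming the controller credentials are passed in as variables. The variable names are hypothetical, and the `aws` and `azurerm` providers are included on the assumption that the spoke and VM modules create native EC2 and Azure resources.

```hcl
terraform {
  required_providers {
    aviatrix = {
      source = "AviatrixSystems/aviatrix"
    }
    aws = {
      source = "hashicorp/aws"
    }
    azurerm = {
      source = "hashicorp/azurerm"
    }
  }
}

# Hypothetical variable names: supply values via terraform.tfvars or TF_VAR_* environment variables.
variable "controller_ip" {
  type = string
}

variable "controller_username" {
  type = string
}

variable "controller_password" {
  type      = string
  sensitive = true
}

# One provider builds the gateways, tunnels and routes in both clouds.
provider "aviatrix" {
  controller_ip = var.controller_ip
  username      = var.controller_username
  password      = var.controller_password
}

# Assumed: used by the modules that create the EC2 instances and Azure VMs.
provider "aws" {
  region = "eu-central-1"
}

provider "azurerm" {
  features {}
}
```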

Below is a diagram of what we will build.

2. What’s the code?

You can find the code here.

I was in two minds about whether to explain the code here.

In the end, I decided not to. My rationale is that either you have a background in Terraform (in which case you may indeed criticize the quality of my code) or you don’t know Terraform well yet, in which case my explanations would drown out the message of this post.

As a compromise, if you do have difficulty with the code, just drop a comment below, and I will be glad to connect.

```hcl
# Build the AWS transit
module "transit_aws_1" {
  source  = "terraform-aviatrix-modules/aws-transit/aviatrix"
  version = "v4.0.3"

  cidr          = "10.77.0.0/20"
  region        = "eu-central-1"
  account       = "Eskimoo"
  name          = "avffhub" # the transit gateway becomes "avx-avffhub-transit"
  ha_gw         = false
  instance_size = "t2.micro"
}

# Build the AWS spokes (two VPCs, each with an EC2 instance)
module "spoke_aws" {
  source  = "Eskimoodigital/aws-spoke-ec2/aviatrix"
  version = "1.0.14"
  count   = 2

  name            = "avffsp${count.index}"
  cidr            = var.spoke_cidrs[count.index]
  region          = "eu-central-1"
  account         = "Eskimoo"
  transit_gw      = "avx-avffhub-transit" # attach to the AWS transit by gateway name
  vpc_subnet_size = "24"
  ha_gw           = false
  instance_size   = "t2.micro"
  ec2_key         = "KP_AVI_EC2_SPOKE"

  # The transit is referenced by name only, so make the build order explicit.
  depends_on = [module.transit_aws_1]
}

# Build the Azure transit
module "transit_azure_1" {
  source  = "terraform-aviatrix-modules/azure-transit/aviatrix"
  version = "4.0.1"

  cidr          = "10.78.0.0/20"
  region        = "West Europe"
  account       = "EskimooAzure"
  ha_gw         = false
  name          = "avazhub" # the transit gateway becomes "avx-avazhub-transit"
  instance_size = "Standard_B1ms"
}

# Build the Azure spokes
module "spoke_azure_1" {
  # source  = "terraform-aviatrix-modules/azure-spoke/aviatrix"
  # version = "4.0.1"
  source = "github.com/Eskimoodigital/Eskimoo_Tfm_Avi_Azu_Spoke_wVM"
  count  = 2

  name             = "avazsp${count.index}"
  cidr             = var.azure_spoke_cidrs[count.index]
  region           = "West Europe"
  account          = "EskimooAzure"
  transit_gw       = "avx-avazhub-transit" # attach to the Azure transit by gateway name
  vnet_subnet_size = "24"
  ha_gw            = false
  instance_size    = "Standard_B1ms"

  depends_on = [module.transit_azure_1]
}

# Build the AWS-Azure transit peering
module "transit-peering" {
  source  = "terraform-aviatrix-modules/mc-transit-peering/aviatrix"
  version = "1.0.4"

  transit_gateways = [
    "avx-avazhub-transit",
    "avx-avffhub-transit",
  ]

  # Both transit gateways must exist before they can be peered.
  depends_on = [module.transit_aws_1, module.transit_azure_1]
}

# Build the Azure VMs, one in the first subnet of each Azure spoke VNet
module "vm_azure_1" {
  # source  = "terraform-aviatrix-modules/azure-spoke/aviatrix"
  # version = "4.0.1"
  source = "github.com/Eskimoodigital/module_azure_vm"
  count  = 2

  name          = "vmazsp${count.index}"
  cidr          = module.spoke_azure_1[count.index].vnet.subnets[0].subnet_id
  nic_name      = "vmnic${count.index}"
  ip_name       = "vmip${count.index}"
  rg_name       = "vmrg${count.index}"
  publicip_name = "vmpip${count.index}"
}
```
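The code indexes into `var.spoke_cidrs` and `var.azure_spoke_cidrs`, but their declarations aren't shown. A minimal `variables.tf` sketch follows; the CIDR values are hypothetical placeholders chosen so they don't overlap with the two transit ranges (10.77.0.0/20 and 10.78.0.0/20), not the ranges used in the lab. The validation simply guards against a list shorter than the `count = 2` used by the spoke modules.

```hcl
# Hypothetical spoke ranges: replace with your own non-overlapping CIDRs.
variable "spoke_cidrs" {
  description = "CIDRs for the AWS spoke VPCs, indexed by count.index"
  type        = list(string)
  default     = ["10.80.0.0/20", "10.81.0.0/20"]

  validation {
    # module.spoke_aws uses count = 2, so at least two valid CIDRs are needed.
    condition     = length(var.spoke_cidrs) >= 2 && alltrue([for c in var.spoke_cidrs : can(cidrhost(c, 0))])
    error_message = "The spoke_cidrs variable needs at least two valid CIDR blocks."
  }
}

variable "azure_spoke_cidrs" {
  description = "CIDRs for the Azure spoke VNets, indexed by count.index"
  type        = list(string)
  default     = ["10.90.0.0/20", "10.91.0.0/20"]

  validation {
    # module.spoke_azure_1 uses count = 2, so at least two valid CIDRs are needed.
    condition     = length(var.azure_spoke_cidrs) >= 2 && alltrue([for c in var.azure_spoke_cidrs : can(cidrhost(c, 0))])
    error_message = "The azure_spoke_cidrs variable needs at least two valid CIDR blocks."
  }
}
```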

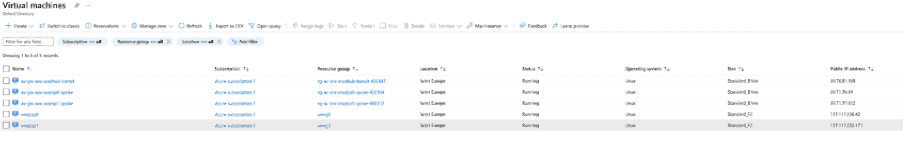

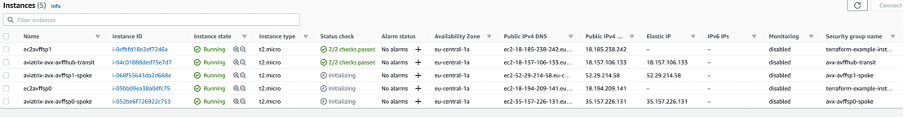

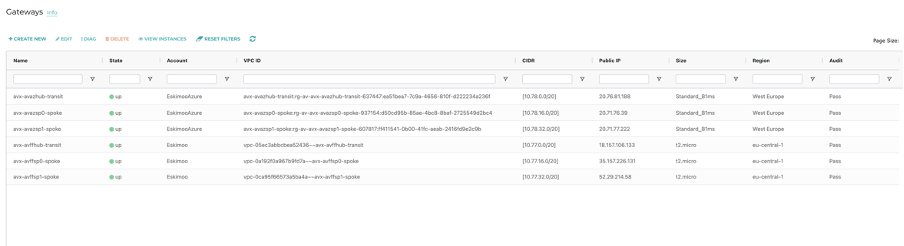

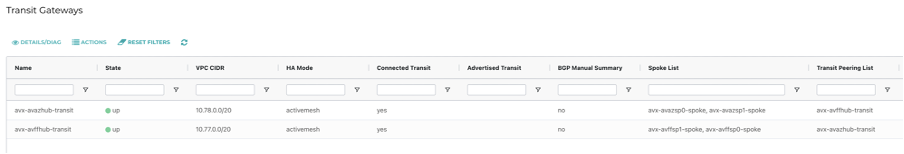

Here is an outline of what gets built:

3. So what?

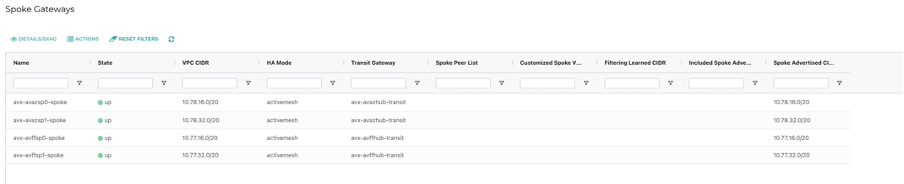

Well, let’s have a look at the complexity that’s hidden under the covers.

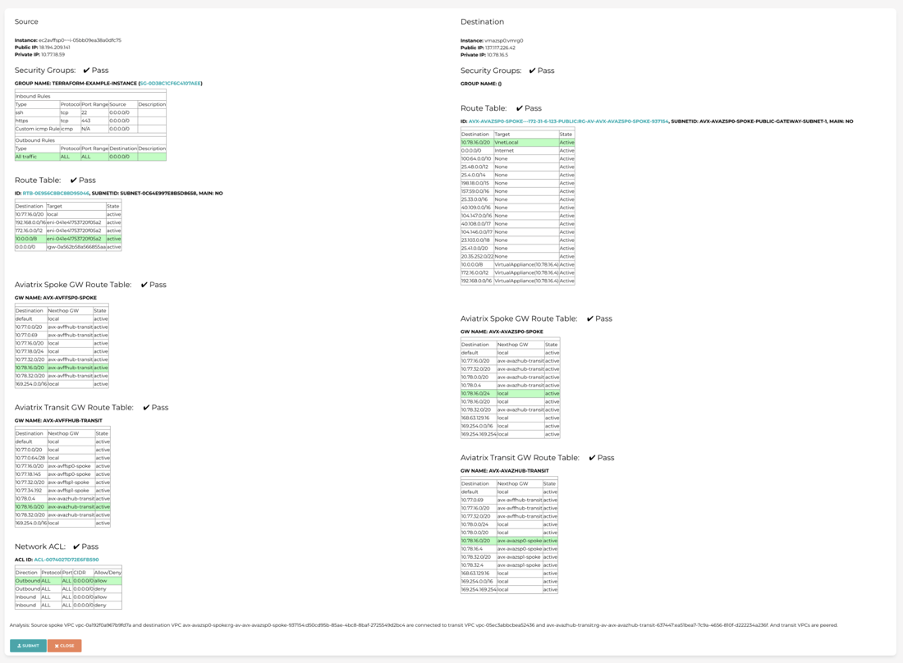

In each VNet or VPC created, the underlying route tables are automatically populated with the right routes. There are no manual routing updates and no route tables to create. It’s all taken care of, and the same goes for the IPsec tunnels between the gateways.
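To give a feel for what is being automated, here is a rough sketch of the kind of routes you would otherwise maintain by hand in native Terraform: one route per remote spoke prefix in every spoke route table, in each cloud. All variable names and CIDR values are hypothetical placeholders, and this is not how the Aviatrix Controller programs the routes internally; it only illustrates the manual equivalent.

```hcl
# Hypothetical inputs you would have to look up and keep in sync yourself.
variable "aws_spoke_route_table_id" {
  type = string
}

variable "aws_spoke_gw_eni_id" {
  type = string
}

variable "azure_resource_group_name" {
  type = string
}

variable "azure_route_table_name" {
  type = string
}

variable "azure_spoke_gw_private_ip" {
  type = string
}

variable "remote_azure_cidrs" {
  type    = list(string)
  default = ["10.90.0.0/20", "10.91.0.0/20"] # hypothetical Azure spoke ranges
}

variable "remote_aws_cidrs" {
  type    = list(string)
  default = ["10.80.0.0/20", "10.81.0.0/20"] # hypothetical AWS spoke ranges
}

# AWS side: send traffic for each Azure prefix to the spoke gateway's ENI.
resource "aws_route" "to_azure" {
  count                  = length(var.remote_azure_cidrs)
  route_table_id         = var.aws_spoke_route_table_id
  destination_cidr_block = var.remote_azure_cidrs[count.index]
  network_interface_id   = var.aws_spoke_gw_eni_id
}

# Azure side: a user-defined route per AWS prefix, next hop the spoke gateway.
resource "azurerm_route" "to_aws" {
  count                  = length(var.remote_aws_cidrs)
  name                   = "to-aws-${count.index}"
  resource_group_name    = var.azure_resource_group_name
  route_table_name       = var.azure_route_table_name
  address_prefix         = var.remote_aws_cidrs[count.index]
  next_hop_type          = "VirtualAppliance"
  next_hop_in_ip_address = var.azure_spoke_gw_private_ip
}
```

Multiply this by every route table in every spoke, add the tunnels and BGP between transits, and the value of having the controller generate it becomes clear.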

4. So what’s the result?

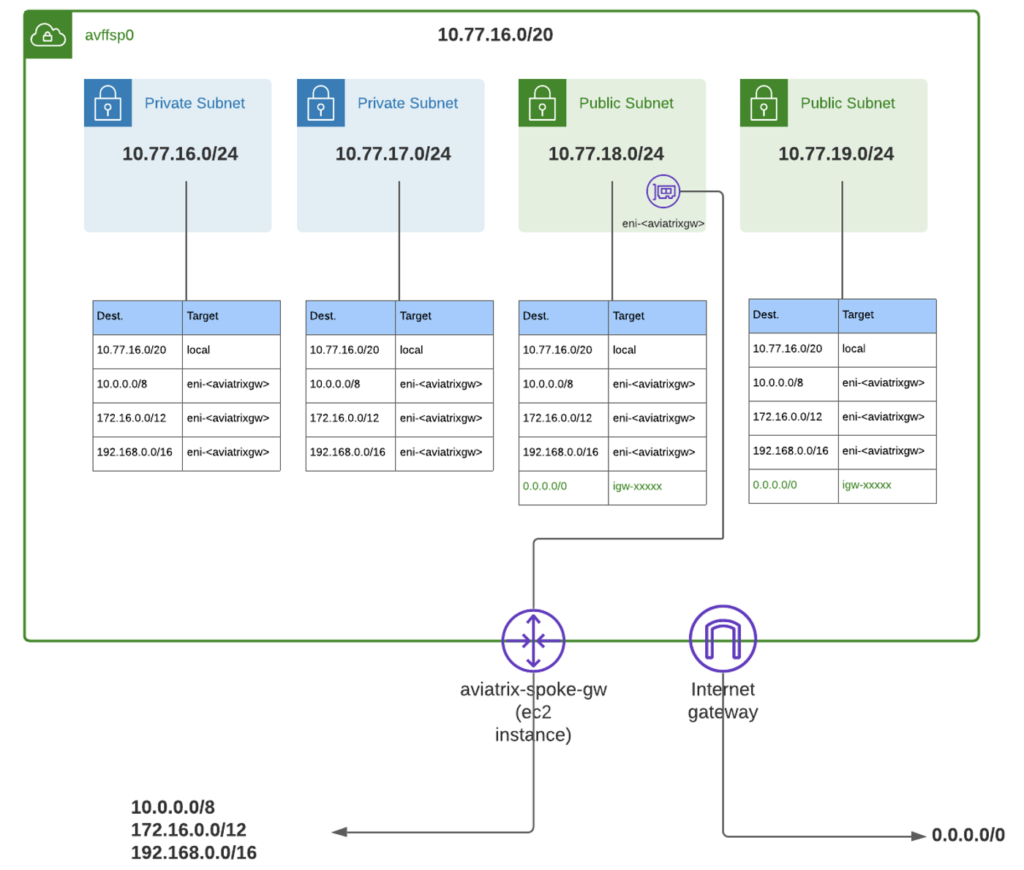

Once everything is built, we can SSH to a VM in Azure and check the connectivity.

Note: I have only deployed the Terraform code at this stage. I have made no other modifications to make this work.

As you can see below, we have out-of-the-box inter-cloud connectivity between the Azure VMs and the AWS EC2 instances, using their private IP addresses!

This is significant from an abstraction point of view: the workloads simply see one routed private network, whichever cloud they live in.

A traceroute from AWS to Azure shows the intermediate hops as the traffic crosses the inter-cloud overlay.

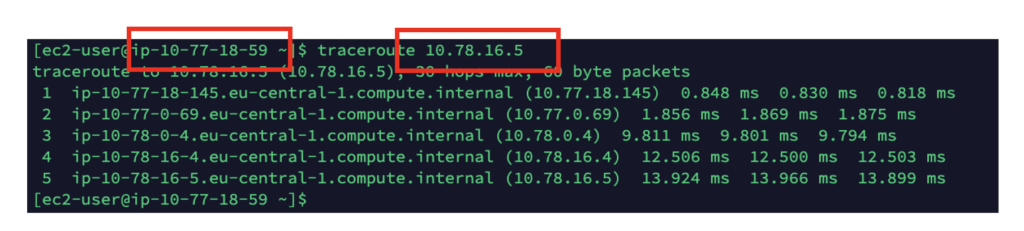

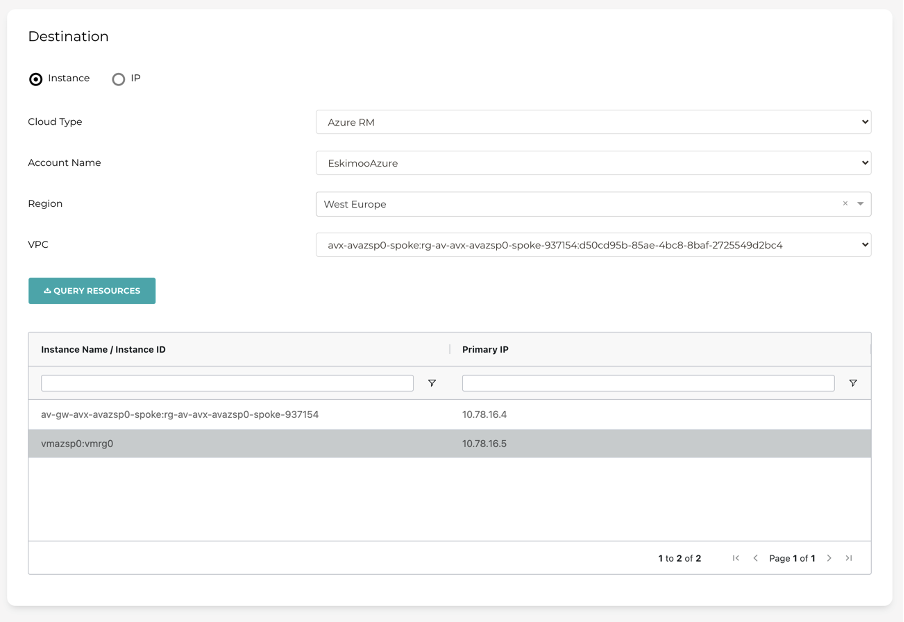

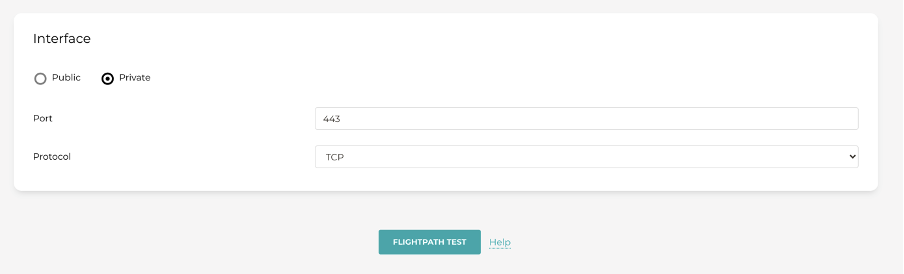

Aviatrix FlightPath also highlights the complexity of what has been built automatically.

5. What’s next?

Keeping to this theme, there are a number of options for the next labs:

- FireNet

- Egress FQDN filtering

- Site2Cloud

- Extend into GCP
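As a taste of the GCP option, the peering part could stay almost unchanged. The sketch below assumes a GCP transit gateway has already been built separately in the same controller, and that the `mc-transit-peering` module peers every gateway in the list with every other one; the name `avx-avgcphub-transit` is hypothetical, following the `avx-<name>-transit` pattern of the existing gateways.

```hcl
# Build the AWS-Azure-GCP transit peering (replaces the two-gateway version)
module "transit-peering" {
  source  = "terraform-aviatrix-modules/mc-transit-peering/aviatrix"
  version = "1.0.4"

  transit_gateways = [
    "avx-avazhub-transit",
    "avx-avffhub-transit",
    "avx-avgcphub-transit", # hypothetical GCP transit gateway
  ]
}
```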

Depending on time and feedback, I’ll follow up with one of these.

Thanks for reading.
